Eliminating Duplicate Data with Effective Data Modeling in Power BI

How to eliminate duplicate data using an effective data modelling strategy in Power BI

Solomun Beyene

12/20/20244 min read

Eliminating Duplicate Data with Effective Data Modeling in Power BI

Introduction

In the realm of data analytics, the presence of duplicated data can lead to inaccurate insights, poor decision-making, and wasted resources. This issue often stems from poor or nonexistent data modeling practices. In this article, we will explore how robust data modeling can resolve the problem of duplicated records and how Power BI can be used to implement these solutions effectively.

The Problem with Duplicated Data

Duplicated data is a common issue and in some cases this arises due to poor data modeling or the absence of a data model altogether. This problem can manifest in various ways:

Inaccurate Reporting: Duplicated records can distort analytical results, leading to false conclusions.

Increased Storage Costs: Storing duplicate data unnecessarily increases storage requirements.

Data Integrity Issues: Maintaining data quality becomes challenging, impacting overall data integrity.

Causes of Duplicated Data:

Lack of unique identifiers or primary keys.

Merging data from multiple sources without proper deduplication.

Data entry errors and inconsistencies.

How Great Data Modeling Can Fix Duplicated Records

Effective data modeling addresses the issue of duplicated data by implementing structured and organized schemas. Here’s how:

Using Power BI to Fix Duplicate Data Issues

Firstly we will create our conceptual model as this will give us a blueprint to how our structure will look like with all the key entities of the business. This can easily be done with a diagram in excel:

Firstly have a look at your original data (Fact Table) which you will be creating your attribute tables (Dimension Table) from and evaluate what tables you would create. The dimension tables are a descriptive attribute that defines how a fact should roll up building a relationship between the too.

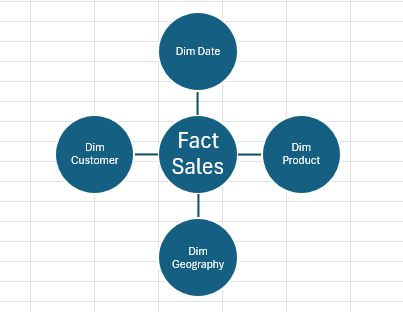

Next, go to excel > Insert > Smart art > Relationships to build yourself a conceptional table. Below you can see that I'm designing my model as a star schema. Its easy to create and its efficient to query with less joins. I've chosen my Dim tables (Dimensional tables) which all have a relationship with the Fact Sales table

Conceptional Model

Now that I have my conceptional table, I can move to Power BI to build my logical table

Power BI provides powerful tools for creating effective data models, which can help in identifying and resolving duplicate data issues. Here’s how you can use Power BI for this purpose:

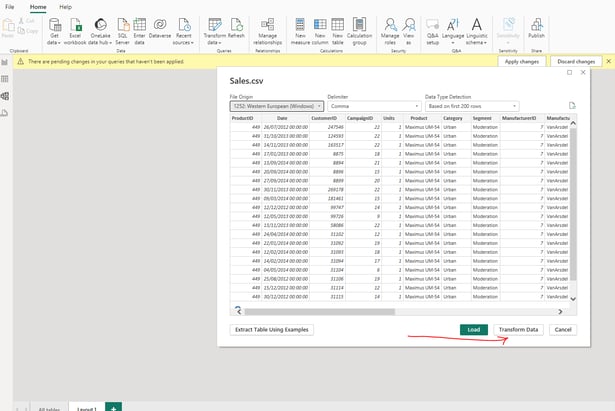

Connect to Data Sources: Import data from various sources (e.g., SQL Server, Excel) into Power BI.

Loading Data

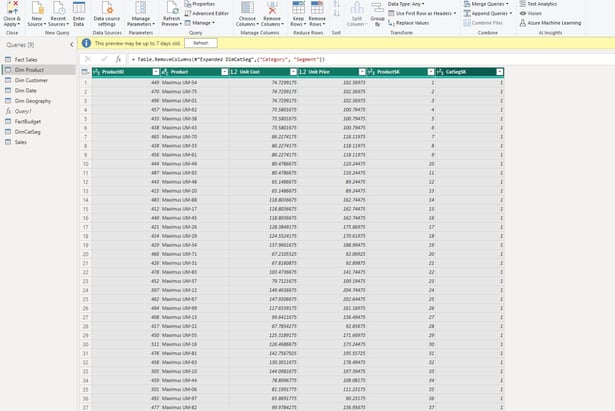

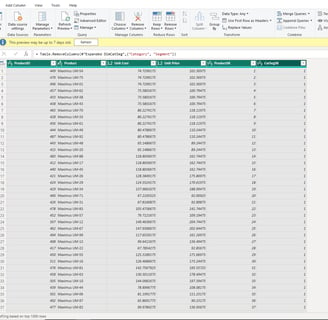

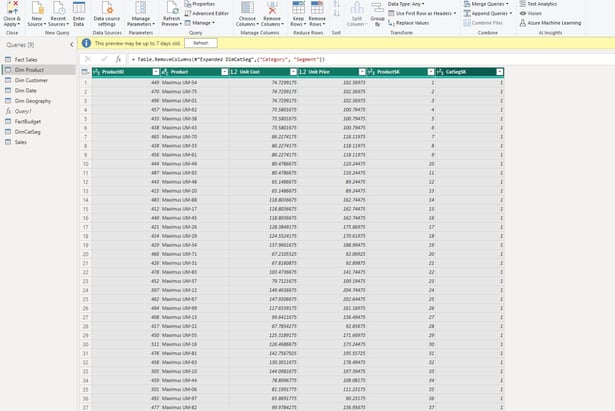

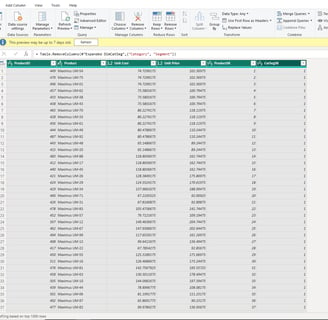

Transform and Clean Data: Use Power Query Editor to clean data by removing duplicates, filtering rows, and correcting data types. This is a really important step and in this step each Dim table you create from your Fact table will have duplicates and this is when you remove this duplicates. This is the first step of when we began to eliminate those duplicates from source level.

Transforming Table

This point, I will duplicate my Fact table and create my Dim tables just as I illustrated in my conceptional model that I made in Excel. In each Dim table I will have columns that is related to that attribute. All columns not associated with the attribute, I will remove columns. For example:

Dim Product - Columns associated are ProductID, Product, Unit Cost, Unit Price etc. I would do this with each of my Dim tables. Then in each Dim table I will clean the dataset. One of the first thing I would do is to highlight my columns and right click then remove duplicates.

Deduping Table

In each of my Dim tables I will remove duplicates as I want my Dim tables to be unique whiles the Fact table will have the duplicates. This is how we create the one to many relationships.

Just to keep this in mind, apart from deduping your datasets, its important also to check the tables you create and carry out necessary cleaning if needed.

Creating unique ID's if needed (build relationships in your column when you doing the joins)

Cleaning values in column

Change formats of columns

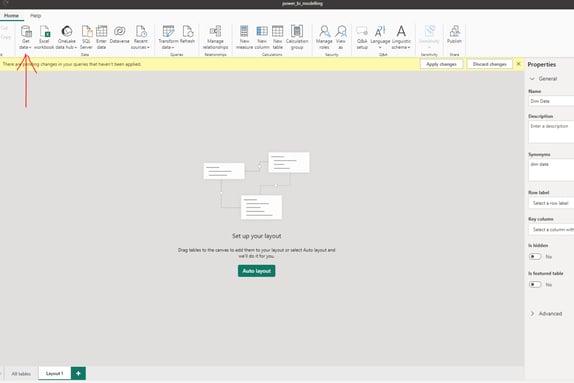

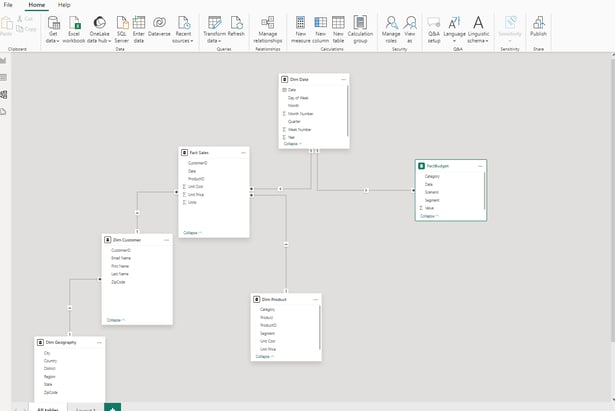

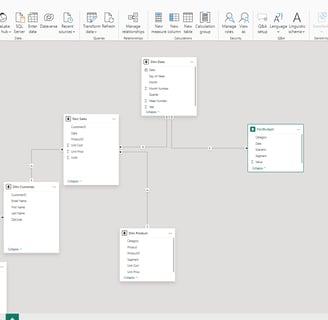

Define Relationships: Once you have your Dim tables and the cleaning process is completed, go to close & apply.

Clean table

Then will then produce your logical model like below. As each column has its unique ID, were able to create relationships between the tables we created.

Logical Model

From the example above, I've also added a Dim Date table so that we can easily filter through our tables via dates and I've added a Fact Budget table to show that we can build on top of what we created to add more relationships to our model. This is key because we want our model to be able to grow as we want in time.

The Result from Effective Data Modeling in Power BI

By leveraging effective data modeling practices in Power BI, organizations can achieve:

Clear structure of your data and the relationships in between. This will now lead the way to build a Physical model using a database tool of your choice.

Clear Data quality rules - No duplications, inconsistent formats issues or inconsistent data values

Ready for the ETL production stage were you can build your workflow knowing you have a blueprint to build an effective and efficient workflow pipelines.

Conclusion

Duplicated data can significantly hinder the quality and reliability of business insights. However, by implementing robust data modeling practices and leveraging Power BI's powerful tools, organizations can effectively manage and eliminate duplicates. This leads to accurate, cost-efficient, and high-integrity data, empowering better decision-making and business outcomes.

Have you integrated logical data models into your ETL processes? Share your experiences and insights in the comments below!